- branching strategy

- bitbucket Runners

- pipeline yaml file

- flyway

- sqlcl

- rsync

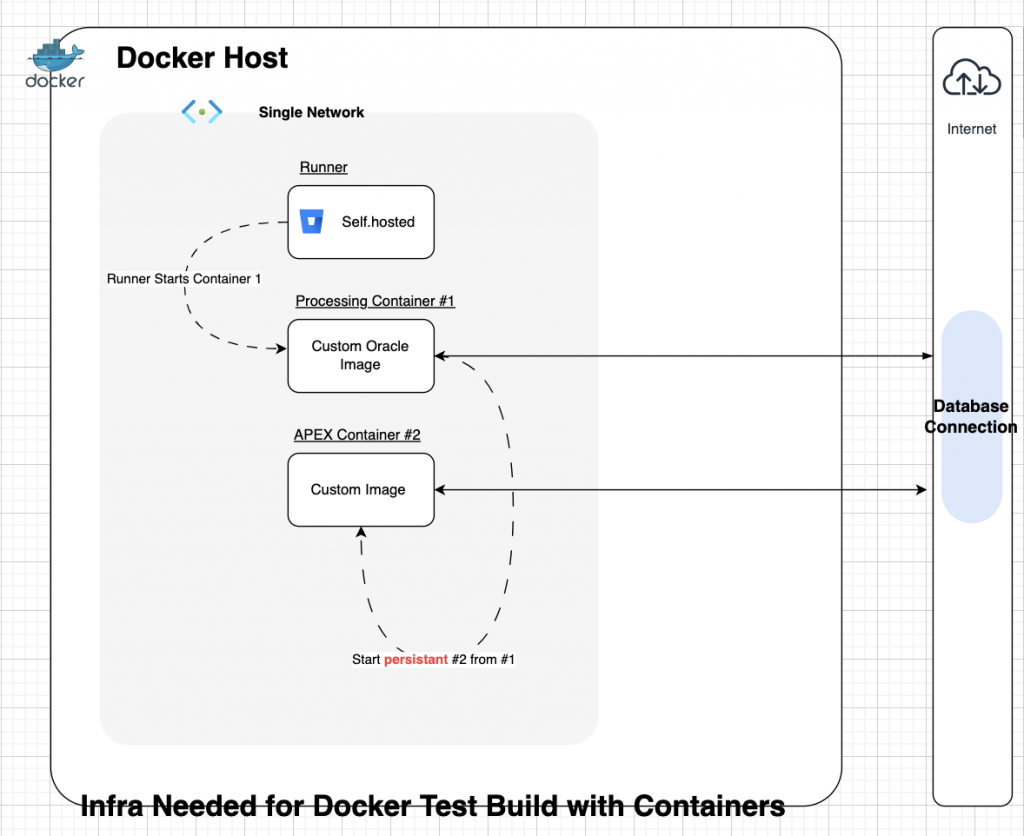

- oracel db + apex in container

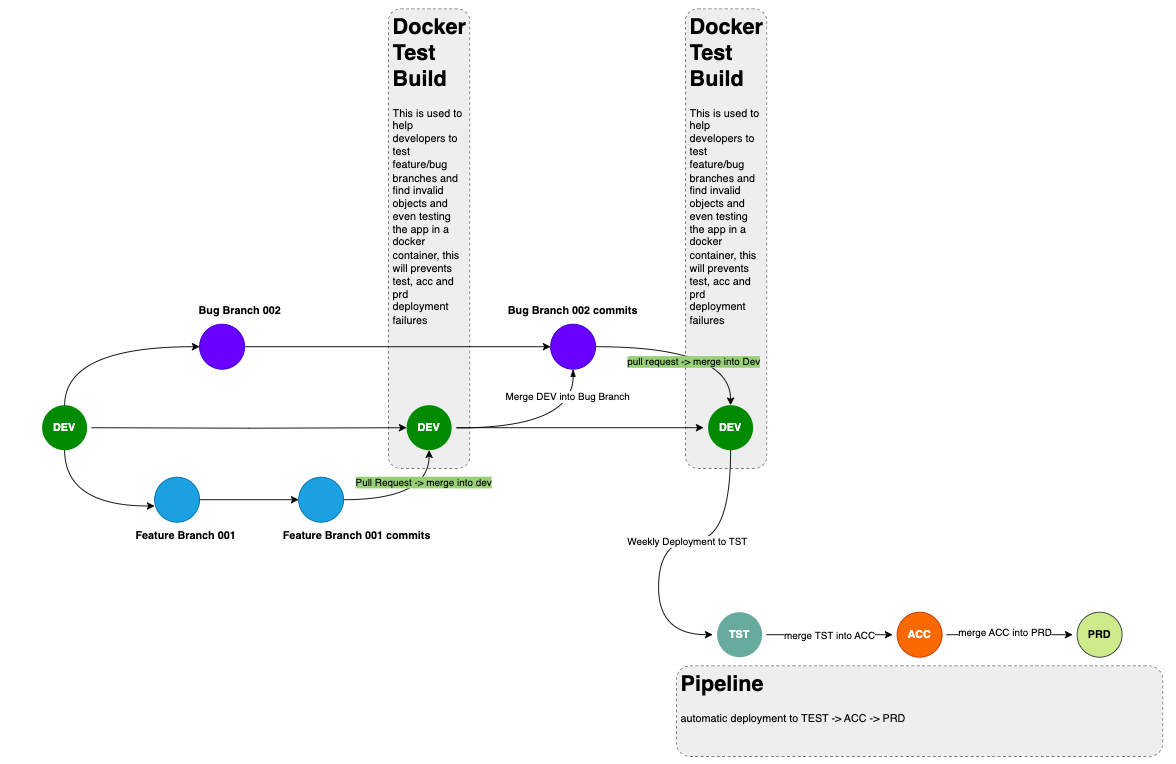

Branch Strategy

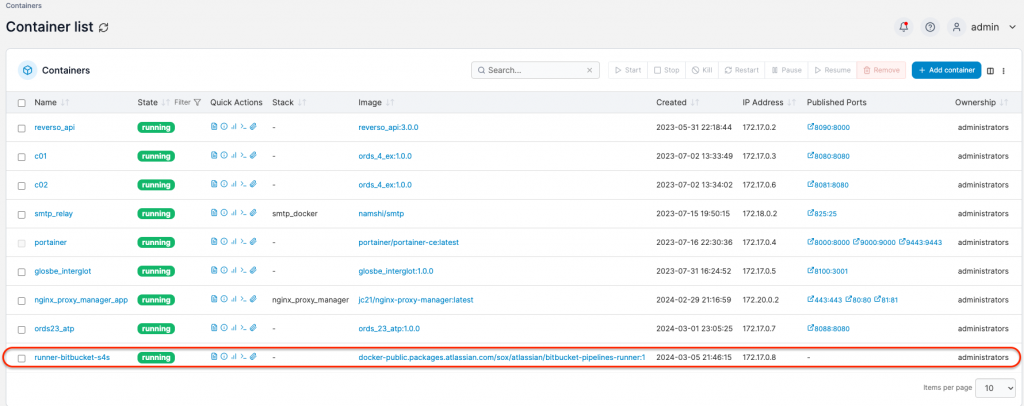

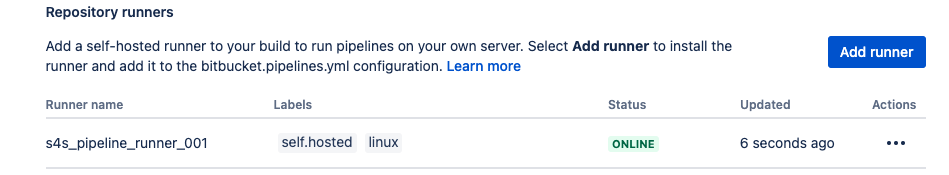

Runners

- configure your on primise running (follow the guide from bitbucket)

- it entire process takes 2 mins or less

- make sure all necessary ports are openning

- when all are set up correctly, you should see the status “online”

Portainer Container List

Create bitbucket-pipelines.yml

here is an example of a feature branch and with explainations.

- this pipeline snippt will do a spin up a docker container (XE 21, APEX 23.1 and ORDs are in this container)

- after container is ready ( ORDs is ready)

- we can then connect the database and install db objects + apex application

- copy static files to server

feature/*:

- step:

name: (commit) start docker container on host machine 2

runs-on:

- self.hosted

script:

- echo "find running container with this image stop it && remove it"

- ssh -o StrictHostKeyChecking=no chaoyuim@192.168.178.70 './my_custom_scripts/cleanup_container.sh chaoyuim/img_db21_apex23:1.0.0'

#- ssh -o StrictHostKeyChecking=no chaoyuim@192.168.178.70 'docker network rm database_network_1521 && docker network create database_network_1521'

#- ssh -o StrictHostKeyChecking=no chaoyuim@192.168.178.70 './my_custom_scripts/add_containers_to_network.sh chaoyuim/oracle_host:2.0.0'

- echo "done cleaning........... ready to start new container ........"

- ssh -o StrictHostKeyChecking=no chaoyuim@192.168.178.70 'docker run --network database_network_1521 --name db21_apex23_test_docker -d -p 8822:8080 -p 1521:1521 chaoyuim/img_db21_apex23:1.0.0'

- echo "write a function to get localhost:8822, release control only after httpcode 302 ( it runs 60 x 5 seconds) it stops earlier if 302 is received."

- ssh -o StrictHostKeyChecking=no chaoyuim@192.168.178.70 './my_custom_scripts/http_request.sh localhost:8822 60'

- echo "Database + ORDs is alive, do you thing , db is at port 1521 , apex at port 8822"

- step:

name: Feature test build on commit

runs-on:

- self.hosted

script:

- chmod +x ./00-generic/01-scripts/change_file_ext_to_sql.sh

- chmod +x ./00-generic/01-scripts/check_invalids.sh

- chmod +x ./00-generic/01-scripts/install_app.sh

- chmod +x ./00-generic/01-scripts/kill_ssh_tunnel.sh

- echo "build anytime a commit is done to a branch prefixed with feature"

- echo $BITBUCKET_BRANCH

- cat ~/.ssh/id_rsa.pub

- sleep 2

- echo "sleep done"

- ssh -o StrictHostKeyChecking=no -L 3306:192.168.178.70:1521 chaoyuim@<server_public_id> -fN

# after the ssh tunnel , i can connect to the db to install db ojects + apex

- sql sys/Apex_12345@localhost:3306/XEPDB1 as sysdba

# here , we copy files to static file server using rsync

- yum install rsync -y

- rsync -avruz -e "ssh -o StrictHostKeyChecking=no" --delete ./03-docroot/* opc@<server_public_id>:/home/opc/docker

- pkill -f "ssh -L" || true

Tagging in pipeline

pipelines:

default:

- step:

script:

- echo "Made a change in build ${BITBUCKET_BUILD_NUMBER}" >> changes.txt

- git add changes.txt

- git commit -m "Updating changes.txt with latest build number."

- git tag -am "Tagging for release ${BITBUCKET_BUILD_NUMBER}" release-${BITBUCKET_BUILD_NUMBER}

- git push origin release-${BITBUCKET_BUILD_NUMBER}- you should chose to create pipeline variables for sensitive data like pwd and username

- use it like

$CONTAINER_REGISTRY_USERNAMEonline 3 and 4

- use it like

- I have created a custom docker image with JAVA, sqlcl, sqlplus , git when they are required to perform task like check out branch , connect to database and rsync static files to file server

How flyway works?

- to make sure flyways works we need to change or database artifacts into .sql extension

- a quick chatgpt can fix it , it scans all child folders and change the extension

#!/bin/bash

# Check if the correct number of arguments is provided

if [ $# -ne 1 ]; then

echo "Usage: $0 <folder_path>"

exit 1

fi

folder_path=$1

# Check if the provided folder exists

if [ ! -d "$folder_path" ]; then

echo "Error: Folder '$folder_path' does not exist."

exit 1

fi

# Loop through all the files in the folder

find "$folder_path" -type f -exec sh -c '

for file do

if [ -f "$file" ]; then

# Check if the file extension is ".gitkeep"

if [[ "${file##*.}" == "gitkeep" ]]; then

echo "Skipping .gitkeep file: $file"

else

# Change the file extension to ".sql"

new_filename="${file%.*}.sql"

# Check if the new filename already exists

if [ -e "$new_filename" ]; then

echo "Warning: File '$new_filename' already exists. Skipping '$file'."

else

# Rename the file

mv "$file" "$new_filename"

echo "Renamed: $file -> $new_filename"

fi

fi

fi

done' sh {} +

echo "File extension change completed."

- after all files are changed to .sql use flyway cli to issue a migrate

## this is snippet from yaml file

- flyway -url=jdbc:oracle:thin:@$SERVICE_NAME -user=$DB_USER -password=$DB_PWD -locations=filesystem:./YOU_FILE_LOACTION migrate

#flyway will scan and execute .sql files in sub folders as well automaticallySQLcl to export / install APEX applications

# use sqlcl to log into your apex parsing schema to do export

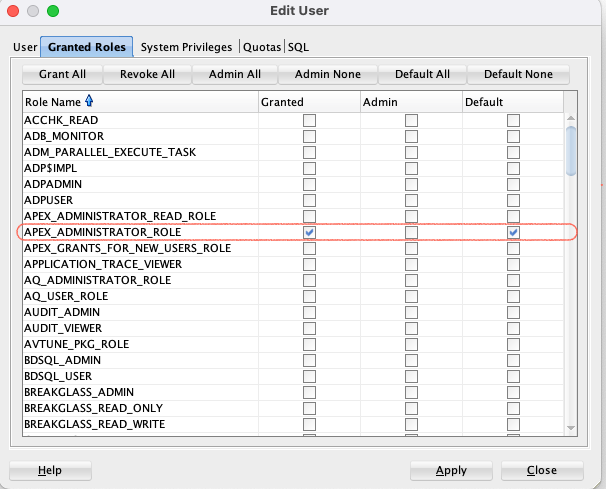

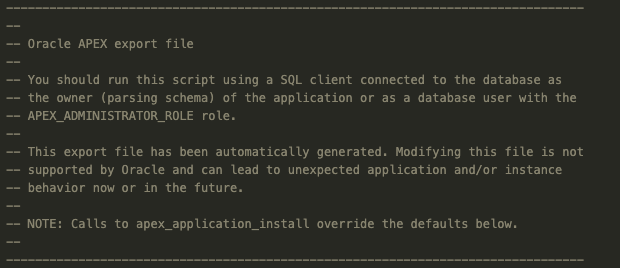

sql> apex export -applicationid 1234 -splitTo be able to install an exported application, the apex parsing schema need to have such role "APEX_ADMINISTRATOR_ROLE"

# to install

sql $DB_USER/$DB_PWD@$DB_SERVICE_NAME ./LOCATION_TO_YOUR/install.sql

YAML Examples

image:

name: chaoyuim/oracle_host:2.0.0

username: $CONTAINER_REGISTRY_USERNAME

password: $CONTAINER_REGISTRY_PASSWORD

pipelines:

branches:

develop:

- step:

name: Build develop on commit

runs-on:

- self.hosted

script:

- echo "build anytime a commit is done to develop"

- cd $BITBUCKET_CLONE_DIR

- echo "current dir $(pwd)"

- git fetch

- git checkout $BITBUCKET_BRANCH

- git pull

- ls -a

- flyway --help

feature/*:

- step:

name: Feature build on commit

runs-on:

- self.hosted

script:

- echo "build anytime a commit is done to a branch prefixed with feature"

pull-requests:

test:

- step:

name: test build on PR

runs-on:

- self.hosted

script:

- echo "test, this is a step will run each time a pull request is done to test"

develop:

- step:

name: develop build PR

runs-on:

- self.hosted

script:

- echo "develop, this is a step will run each time a pull request is done to develop"

feature/*:

- step:

name: Build for feature branch pull request (overlaps with PR dev?)

runs-on:

- self.hosted

script:

- echo "Hello, feature branch PR!"

default:

- step:

name: this runs on each commits on any branch

runs-on:

- self.hosted

script:

- echo "Hello, World!"Rsync to send static files to file server

- store customs images public key on to your file server

/.ssh/authorized_keys - install nginx on the static file server, configure ports open 22, 80 and 443

- create sub folders in html doc root , e.g ./dev ./test …

- change permission or change owner of these folders to your user will do rsync

- do rsync

## this is yaml file

- rsync -avruz -e "ssh -o StrictHostKeyChecking=no" --delete ./03-docroot/* opc@141.144.195.253:/home/opc/devCreate DB + APEX images

- Download Oracle Database XE .rpm file

- Download Oracle APEX .zip file

- create first Dockerfile and build it as base image so that we can install it

FROM oraclelinux:7-slim

USER root

RUN cd

WORKDIR .

ENV ORACLE_DOCKER_INSTALL=true

RUN yum -y install oracle-database-preinstall-21c

https://download.oracle.com/otn-pub/otn_software/db-express/oracle-database-xe-21c-1.0-1.ol7.x86_64.rpm

COPY ./*.zip ./

COPY ./*.rpm ./

COPY ./install_db.sh ./- create a container with this image

APEX installation Guide (sqlplus)

- install APEX

sql> alter session set container=XEPDB1;

sql> @apexins.sql SYSAUX SYSAUX TEMP /i/

sql> quit2. Set WorkSpace Admin account (only needed on fresh installs)

sql> alter session set container=XEPDB1;

sql> @apxchpwd.sql3. set user APEX_PUBLIC_USER

sql> alter session set container=XEPDB1;

sql> ALTER USER APEX_PUBLIC_USER ACCOUNT UNLOCK;

sql> ALTER USER APEX_PUBLIC_USER IDENTIFIED BY YOUR_PWD;

sql> quit