more information on oracle-base

PreRequisite

- data dump export from your source database

- a host (read here) with sqlplus installed ( try OCI vm instance with image Oracle Cloud Developer Linux, it has sqlplus , sqlcl pre installed) and able to connect to Autonomous Database

- an Oracle OCI object storage bucket (upload your database dump to this bucket, your bucket can be private, no need to set it public. Once uploaded, copy the link via object details button, the link is needed later)

- an Oracle OCI user who has MANAGE Buckets/Objects rights to this bucket

———————————————————————————-

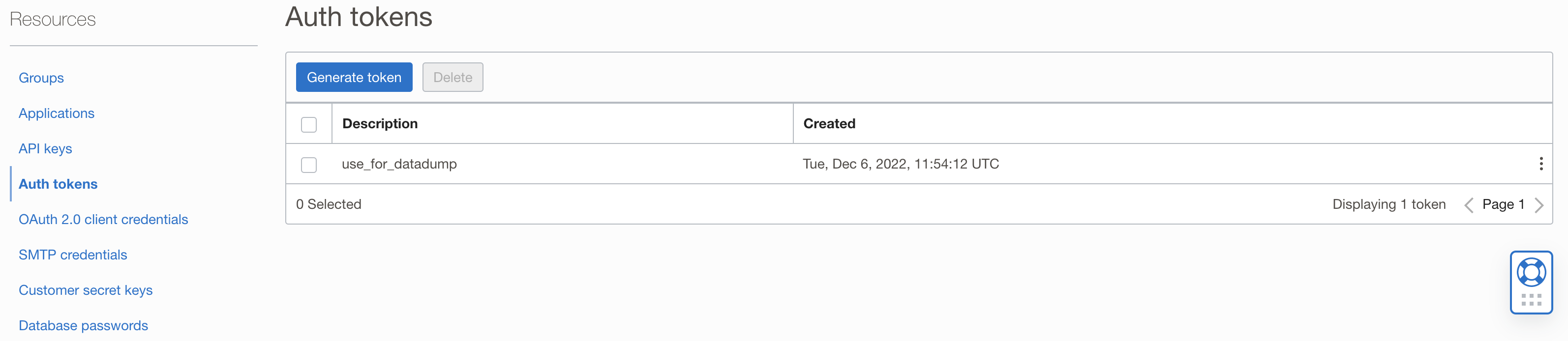

Step !: Create an Auth TOKEN from OCI user

save the token somewhere safe (you can copy it during creation, once created it can NOT be displayed anymore)

———————————————————————————-

Step 2: use sqlplus login to your autonomous database using !!!<ADMIN> account and run

begin

dbms_cloud.create_credential(credential_name => 'OBJECT_STORE_CRED'

,username => '${your objectuser}'

,password => '${your auth token}');

end;

/

# to list all created credentials

SELECT credential_name, username, comments FROM all_credentials;———————————————————————————-

Step 3: create .par file

On the host machine, create a .par file e.g my_import.par and type the following content ( you can also work without the par file, but then u need to type everything all at once )

Infor about the parameters:

Schemas is optional if your export is on schema level, remap_tablespace optional , remap_schema is optional as well. content optional, EXCLUDE is optional, table_exists_action optional. logfile optional

directory=DATA_PUMP_DIR

credential=OBJECT_STORE_CRED

schemas=${SOURCE_SCHEMA}

remap_tablespace=${SOURCE_TABLESPACE}:${TARGET_TABLESPACE}

remap_schema=${SOURCE_SCHEMA}:${TARGET_SCHEMA}

dumpfile=${URL To your Dump file on your object storage}

content=DATA_ONLY

EXCLUDE=CONSTRAINT,REF_CONSTRAINT,INDEX

TABLE_EXISTS_ACTION=[SKIP | APPEND | TRUNCATE | REPLACE]

LOGFILE=my_import_log.logTABLE_EXISTS_ACTION=[SKIP | APPEND | TRUNCATE | REPLACE]

SKIP is default value. If SKIP is used then table replacing is not done.

APPEND loads rows from the export files and leaves target existing rows unchanged.

TRUNCATE deletes existing rows in target table and then loads rows from the export.

REPLACE drops the existing table in the target and then creates and loads it from the export.

———————————————————————————-

Step 4: execute import command

when you have this .par file , type (It needs User ORACLE and export TNS_ADMIN folder )

impdp userid=ADMIN/${password}@${tns_name} parfile=${path to your .par file}before you run this, you probably need to do the following to disable triggers and constraints if you do a data only import

select t.*

from user_constraints t;

select *

from user_triggers t;

-- disable all Foreign key constraints

declare

l_sql clob;

begin

for rec in (select t.constraint_name

,t.table_name

from user_constraints t

where t.constraint_type in ('R')

and t.status = 'ENABLED'

and t.table_name not in ('flyway_schema_history'))

loop

l_sql := q'[ALTER TABLE ]' || rec.table_name || ' disable CONSTRAINT ' || rec.constraint_name;

-- dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

for rec in (select t.constraint_name

,t.table_name

from user_constraints t

where t.constraint_type in ('P'

,'U')

and t.status = 'ENABLED'

and t.table_name not in ('flyway_schema_history'))

loop

l_sql := q'[ALTER TABLE ]' || rec.table_name || ' disable CONSTRAINT ' || rec.constraint_name;

-- dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

end;

-- Enable all constraints

declare l_sql clob;

begin

-- first primary key and unique key

for rec in (select t.constraint_name

,t.table_name

from user_constraints t

where t.constraint_type in ('P'

,'U')

and t.status = 'DISABLED'

and t.table_name not in ('flyway_schema_history'))

loop

l_sql := q'[ALTER TABLE ]' || rec.table_name || ' enable CONSTRAINT ' || rec.constraint_name;

--dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

for rec in (select t.constraint_name

,t.table_name

from user_constraints t

where t.constraint_type in ('R')

and t.status = 'DISABLED'

and t.table_name not in ('flyway_schema_history'))

loop

l_sql := q'[ALTER TABLE ]' || rec.table_name || ' enable CONSTRAINT ' || rec.constraint_name;

--dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

end;

-- disable all triggers

declare

l_sql clob;

begin

for rec in (select t.trigger_name

from user_triggers t

where t.status = 'ENABLED')

loop

l_sql := 'ALTER TRIGGER ' || rec.trigger_name || ' DISABLE';

--dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

end;

-- enable all triggers

declare

l_sql clob;

begin

for rec in (select t.trigger_name

from user_triggers t

where t.status = 'DISABLED')

loop

l_sql := 'ALTER TRIGGER ' || rec.trigger_name || ' ENABLE';

dbms_output.put_line(l_sql);

execute immediate l_sql;

end loop;

end;———————————————————————————-

Step 5: access the log file

As long as your connection is good, even there are errors during import, it should finish with a log file. You can check the log file by running this sql.

select *

from dbms_cloud.list_files('DATA_PUMP_DIR')

order by last_modified desc;Store your logfile to OCI object storage

run this plsql code to upload log file to your bucket storage and so that you can download it and view it in detail

begin

dbms_cloud.put_object('OBJECT_STORE_CRED'

,'${URL}'

,'DATA_PUMP_DIR'

,'${file name you see in previous sql}');

end;

/

e.g ${URL} ‘https://objectstorage.eu-amsterdam1.oraclecloud.com/n/aosdfasdf/b/DD/o/${anyname}.log’

Troubleshooting

if having ORA-28759: failure to open file

check sqlnet.ora file in /op/oracle/network/admin folder value of wallet location